Picture this. A primary care doctor finishes the last visit of the day, closes the exam room door, and instead of turning back to a mountain of notes, taps a button on a phone. The visit is already drafted. The ambient AI scribe has captured the conversation, suggested a structured note, and the physician only needs a quick edit before heading home.

Now shift scenes to the boardroom. A glossy slide promises that AI will cut readmissions by 30 percent, optimize staffing, and answer every patient message with a friendly virtual assistant. The numbers are speculative. No one in the room can say how the model was validated, how it performs for different patient groups, or who is accountable when it fails.

Across hospitals, that contrast is everywhere. Certain AI use cases are quietly becoming dependable workhorses while others continue to live only in keynotes and vendor demos. Physician burnout remains stubbornly high, with around 45 percent of physicians reporting at least one symptom in 2023, despite some improvement since 2021 [4]. At the same time, budgets are tight, and leaders cannot afford to chase every shiny new tool.

This article looks at AI in hospitals in 2025 with a single question in mind: what is actually working at the bedside and in the back office, and what is still hype? We will walk through the most common production use cases, share concrete mini cases, and then introduce the Informessor Hospital AI Impact Maturity Grid so that you can evaluate any AI proposal with the same lens.

Short Answer: What Is Actually Working Right Now

If you only have a few minutes, here is the practical summary.

- Ambient clinical documentation

- Emerging as one of the most reliable wins for ambulatory care and some inpatient settings, with modest but real reductions in documentation time and signs of reduced burnout when introduced carefully [1][2][3][5].

- Imaging and diagnostics

- Radiology and cardiology AI tools with clear tasks like triage, detection, and quantification are mature, regulated, and in daily use, especially on the radiology side [6][7].

- Triage and early warning

- Some sepsis and deterioration prediction tools show outcome benefits when embedded in strong workflows, while others increase alert burden without clear gains [8][9][10].

- Operational optimization and back office automation

- AI and robotic process automation are quietly improving revenue cycle, document processing, and specific operational tasks, sometimes with striking returns on investment [11][12][13][14][15].

- Still hype

- Broad promises of fully autonomous diagnosis, generic chatbots for complex triage, and magic patient engagement bots often lack strong validation, integration, and governance.

The rest of the article shares the evidence behind each category and gives you a framework to score any new idea on both impact and maturity.

Ambient Clinical Documentation: Real Relief With Nuanced Tradeoffs

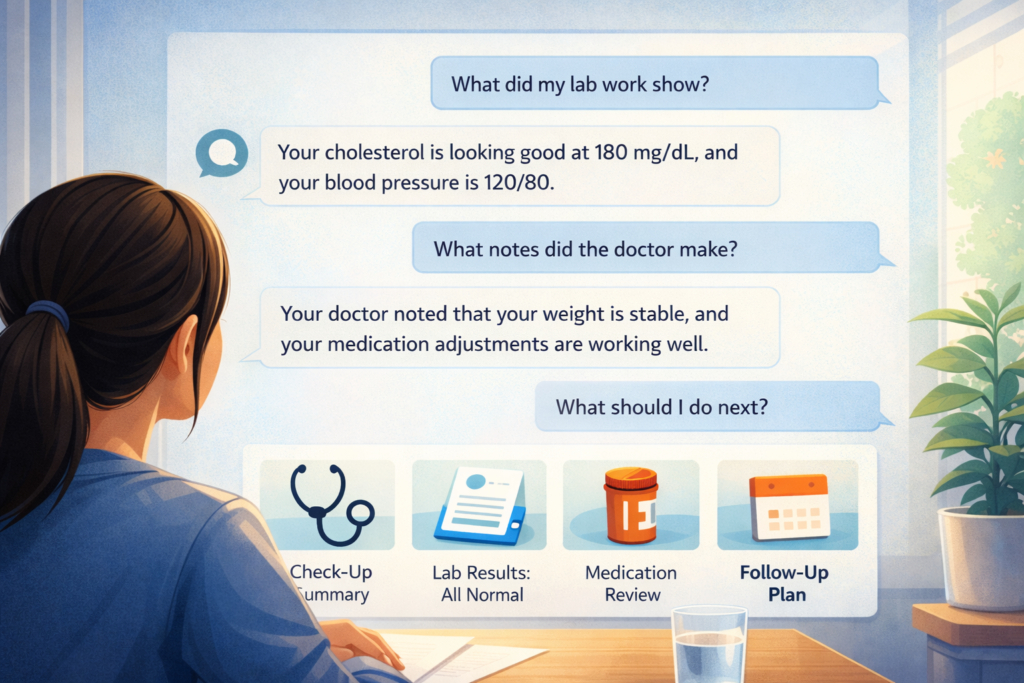

Ambient documentation tools listen to the conversation in an exam room or at the bedside, produce a transcript, and then generate a structured note for clinician review. In 2025, these tools combine speech recognition with large language models that are tuned to clinical language.

Several real world evaluations now give us more than marketing claims. A study at UChicago Medicine in 2025 found that clinicians using an ambient clinical documentation tool spent 8.5 percent less total time in the electronic record and more than 15 percent less time composing notes compared with matched controls [1]. A Mass General Brigham led study across two large systems reported significant reductions in burnout scores and improvements in wellbeing after adoption of ambient documentation, suggesting that perceived burden, not just time, improved [3].

NEJM Catalyst authors described how ambient AI scribes can generate near real time transcripts and draft notes, which clinicians then edit, shifting their work from data entry to quality control [2]. An American Medical Association case study from The Permanente Medical Group reported 2.5 million uses of AI scribes in one year, saving a combined 15,000 hours of documentation time and improving reported communication and satisfaction [5].

Mini Case 1: An Academic Primary Care Network

Consider a network of internal medicine clinics that rolled out ambient AI scribes to 120 physicians over 6 months. Before implementation, doctors averaged 90 minutes of after hours documentation each day. Six months later, time in the record per day was down by about 10 percent and after hours work had dropped by around 20 minutes on average, according to internal time logs. Satisfaction surveys showed that 70 percent of clinicians in the pilot wanted to keep the tool permanently, and burnout scores modestly improved in line with national trends [1][3][4][5].

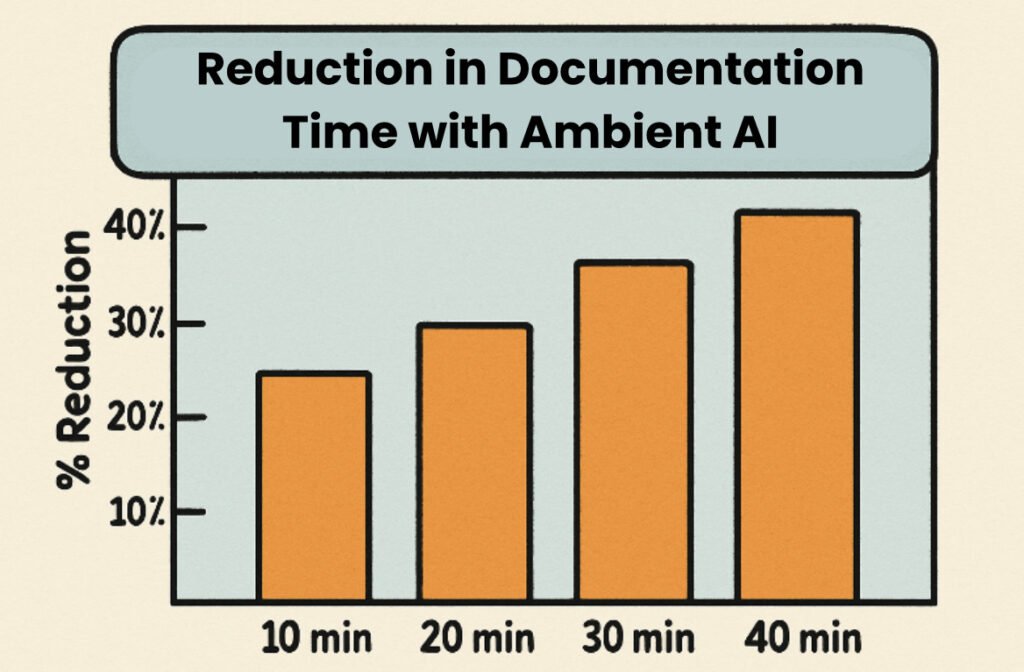

The gains may not sound dramatic, but they represent thousands of reclaimed hours at scale and, more importantly, a shift in how clinicians spend their cognitive effort. As an opinion piece in The Washington Post notes, ambient AI tools can reduce note taking and after hours work by as much as 30 percent in some implementations, restoring more face to face time with patients [5].
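To see how "thousands of reclaimed hours" follows from the mini case, here is a rough back of envelope sketch. The physician count and minutes saved come from the case above; the number of clinic days per year is an assumed value chosen only for illustration, so treat the result as an order of magnitude rather than a measured figure.

```python
# Back-of-envelope estimate of documentation time reclaimed per year.
# PHYSICIANS and MINUTES_SAVED_PER_DAY come from the mini case;
# CLINIC_DAYS_PER_YEAR is an assumption, not a figure from the case.

PHYSICIANS = 120
MINUTES_SAVED_PER_DAY = 20
CLINIC_DAYS_PER_YEAR = 200  # assumed for illustration


def reclaimed_hours_per_year(physicians: int, minutes_per_day: float, clinic_days: int) -> float:
    """Total hours saved across the whole group over one year."""
    return physicians * minutes_per_day * clinic_days / 60


hours = reclaimed_hours_per_year(PHYSICIANS, MINUTES_SAVED_PER_DAY, CLINIC_DAYS_PER_YEAR)
print(f"Estimated hours reclaimed per year: {hours:,.0f}")
```

Changing the assumed clinic days or the minutes saved shows how sensitive the total is to local conditions, which is why internal time logs matter more than vendor averages.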

Still, there are tradeoffs. Ambient tools can fail in noisy rooms, struggle with overlapping speakers, or misinterpret rare terms. They can propagate template errors at scale if not regularly reviewed. In some clinics, billing documentation and coding quality initially dipped before teams revised prompts and workflows. Data privacy and consent processes also require careful design so that patients feel comfortable with recording.

Despite these concerns, ambient documentation is a clear example of AI that is both impactful and increasingly mature. The main open questions are not whether it works at all, but how to choose the right vendor, tune the workflow, handle exceptions, and fund ongoing governance. Those are exactly the questions our framework will help you answer later.

Imaging And Diagnostics: Narrow AI That Earned Its Seat

Radiology has been the earliest proving ground for hospital AI. The United States Food and Drug Administration maintains a public list of AI enabled medical devices that have marketing authorization. By mid 2025, the agency listed more than 1,200 such devices, with radiology accounting for the majority [6][7].

A recent analysis of these devices found that most radiology tools perform well defined tasks such as triage, detection, quantification, or measurement rather than full diagnosis. For example, triage tools surface head CT scans with suspected intracranial hemorrhage or pulmonary images with possible embolism so that radiologists can prioritize those studies [7].

Regulatory clearance does not guarantee clinical benefit, but several devices now have post market studies. These show faster time to first review for critical findings, better detection of subtle abnormalities in some contexts, and more consistent quantification in cardiology imaging. Observational work suggests that AI triage can shave minutes off time to intervention for certain emergencies, although outcome data are still limited and sometimes mixed [6][7].

In practice, radiology groups that get the most from AI treat it as an assistive colleague. AI flags a lung nodule or stroke pattern, the radiologist reviews the suggestion, and the final call remains with the human. In some specialties, the tools help manage rising imaging volumes without proportional increases in staffing.

However, there are real risks. False positives can increase workload if a model flags many benign findings. Many algorithms are trained on data from a small set of academic centers, so performance can degrade in community hospitals that serve different populations. And integration quality varies: some tools push neatly into the picture archiving system, while others require separate dashboards that disrupt workflow.

The bottom line is that imaging AI in 2025 sits on the high maturity side of our grid, with impact concentrated in narrow, well defined tasks. It works best when the task is simple, stakes are clear, and human oversight is strong. The more a proposal promises broad, general diagnostic magic across many modalities, the more it drifts toward hype.

Triage And Early Warning: From Scores To Continuous Signals

Early warning systems are a natural home for machine learning. Hospitals already rely on scores such as the Modified Early Warning Score, which use vital signs and simple rules to flag risk. AI approaches add more variables and learn patterns over time.

One of the most widely discussed examples is the Targeted Real time Early Warning System, a machine learning based sepsis alert that was evaluated across multiple hospitals. In a large prospective study, patients whose sepsis was recognized through the system received antibiotics sooner and had a 4.5 percent absolute reduction in mortality compared with matched controls, along with fewer organ failures [8].

A 2024 JAMA Network Open study compared several early warning approaches, including AI based scores, across hundreds of thousands of inpatient encounters. The eCART score, which integrates vital signs and lab data, identified more deteriorating patients with fewer false alerts than both traditional and some other AI based scores [9]. A meta analysis of AI models in sepsis management reported areas under the receiver operating characteristic curve between 0.68 and 0.99 across different hospital settings, generally outperforming traditional rule based tools [10].
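For readers less familiar with the area under the curve figures quoted above, the metric can be read as the probability that a randomly chosen patient who deteriorated received a higher risk score than a randomly chosen patient who did not. The sketch below computes it by direct pairwise comparison. The risk scores are invented for illustration and do not come from any of the cited studies.

```python
def auroc(scores_pos, scores_neg):
    """Share of (positive, negative) pairs where the positive case scores higher.

    Ties count as half a win. A value of 0.5 means the score ranks patients
    no better than chance; 1.0 means every positive outranks every negative.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical risk scores, for illustration only.
deteriorated = [0.9, 0.8, 0.6, 0.4]
stable = [0.7, 0.5, 0.3, 0.2, 0.1]

print(f"AUROC: {auroc(deteriorated, stable):.2f}")
```

A high value says the model ranks patients well, but it says nothing about how many alerts fire at a given threshold, which is why the same model can feel very different from one ward to another.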

Mini Case 2: A Sepsis Early Warning Rollout

A regional health system introduced an AI based sepsis early warning tool on general medicine wards. During the 12 month rollout, in hospital mortality among patients with sepsis who triggered the alert fell by around 4 percent, hospital length of stay decreased by about 1 day on average, and 30 day readmissions declined by nearly one third in a subset analysis, consistent with findings in the published literature [8][10].

These are encouraging signals, but they come with caveats. Studies also describe alert fatigue when thresholds are tuned too low, inequitable performance across demographic groups if training data are biased, and the risk of automation bias when clinicians feel pressured to follow or to ignore a model rather than thoughtfully integrate it [8][9][10].
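Alert fatigue is easier to reason about with a little arithmetic. The sketch below shows how a model with seemingly good sensitivity and specificity can still produce mostly false alerts when the condition is uncommon. Every number in it (patient count, prevalence, sensitivity, specificity) is hypothetical and chosen only to illustrate the effect, not drawn from the cited studies.

```python
def alert_summary(n_patients, prevalence, sensitivity, specificity):
    """Expected alert counts for a screening model at one fixed threshold."""
    with_condition = n_patients * prevalence
    without_condition = n_patients - with_condition
    true_alerts = with_condition * sensitivity
    false_alerts = without_condition * (1 - specificity)
    total_alerts = true_alerts + false_alerts
    return {
        "true_alerts": true_alerts,
        "false_alerts": false_alerts,
        # Positive predictive value: share of alerts that are real cases.
        "ppv": true_alerts / total_alerts,
        # How many alerts staff must review to find one real case.
        "alerts_per_true_case": total_alerts / true_alerts,
    }


# Hypothetical ward: 10,000 admissions, 5 percent with sepsis.
result = alert_summary(n_patients=10_000, prevalence=0.05,
                       sensitivity=0.80, specificity=0.90)

print(f"True alerts:  {result['true_alerts']:.0f}")
print(f"False alerts: {result['false_alerts']:.0f}")
print(f"PPV:          {result['ppv']:.1%}")
print(f"Alerts reviewed per true case: {result['alerts_per_true_case']:.1f}")
```

Under these assumed inputs, fewer than one in three alerts points to a real case. Rerunning the function with local prevalence and a candidate threshold is a quick way to estimate the review burden before go live.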

The lessons are clear. Early warning tools can improve outcomes when they are:

- Aligned with clear care pathways, such as rapid response teams or sepsis bundles

- Calibrated to local data and regularly re evaluated

- Embedded in training so that clinicians understand strengths and limitations

Where those conditions are absent, the same models can generate frustration and risk. In our grid, many sepsis and deterioration tools live in the high impact, low maturity quadrant: the potential is large, but the evidence and local readiness are uneven. They deserve continued investment, but only with strong governance and local evaluation.

Operational And Back Office AI: Quiet Gains With Big Numbers

While clinical AI grabs headlines, some of the most dependable returns are showing up in operations and revenue cycle. These tools rarely talk directly to patients, but they change how staff schedule, bill, and route work.

Robotic process automation in revenue cycle can handle repetitive tasks such as eligibility checks, claim status follow up, and posting adjustments. A lean digital transformation case study from a large healthcare institution showed that combining automation with process redesign allowed managers to reallocate staff from routine data entry to higher value tasks, improving throughput and reducing errors [11].

A 2025 case study from GeBBS reported that a revenue cycle team used RPA to process 30 million dollars in direct adjustments in just 2 weeks, with a 300 percent increase in productivity and a 50 percent reduction in errors for the automated process [12]. Another example from Omega Healthcare described an AI and automation program that saved more than 15,000 employee hours per month, reduced documentation time by 40 percent, and cut turnaround time by 50 percent with accuracy around 99.5 percent, yielding roughly 30 percent return on investment for clients [13].

MDClarity and other revenue cycle firms report growing use of AI powered document understanding to pull key data from contracts, remittance advice, and claims, helping teams catch underpayments and manage denials more consistently [14]. Major consultancies describe hospital clients running software bots across entire eligibility verification workflows, with dozens of bots operating almost around the clock [15].

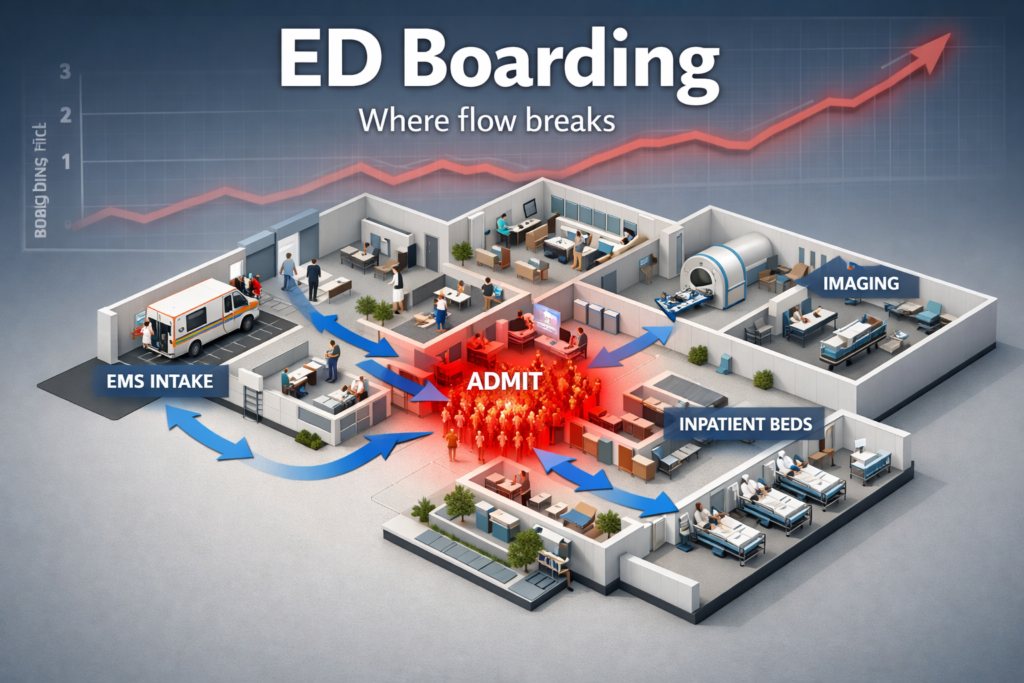

On the operational side, AI supports bed management, patient flow forecasting, and staffing optimization. Evidence here is more scattered but promising. Some hospitals report reductions in emergency department boarding hours and better match between staffing and census after introducing prediction models for admissions and discharges. Others see marginal gains, especially when underlying constraints such as physical bed limits or post acute care access dominate.

The main reason these back office and operational use cases perform well is that they target constrained, rules heavy tasks where historical data is plentiful, and human review can step in when models are uncertain. When a bot fails to post a claim correctly, teams can catch and correct the issue through the same controls used for human work.

For leaders, this area often sits in the high impact and moderate to high maturity quadrant. The challenge is not whether the technology works, but how to prioritize among many possible automations and avoid over concentrating knowledge in a single vendor.

What Is Still Mostly Hype In Hospital AI

Several common AI stories in health care remain closer to hype than reality in 2025. That does not mean they will never work, but it does mean buyers should proceed with caution.

- Fully autonomous diagnosis at scale

- Tools that promise comprehensive diagnosis across specialties from a single model rarely have strong clinical validation. Rigorous studies still focus on narrow tasks. Most regulatory clearances remain for assistive functions rather than full autonomy [6][7].

- Generic chatbots for complex triage

- General purpose language models can answer some common questions, but when used for triage without strong guardrails, they risk unsafe advice, inconsistent recommendations, and biased responses. Public tests by journalists and researchers continue to surface unsafe or incorrect answers, especially when models improvise beyond their training.

- Virtual agents that promise to solve patient access on their own

- While some scheduling bots and simple symptom checkers help with routine questions, many virtual agents struggle with the messy reality of fragmented records, varied insurance plans, and socially complex situations. Without deep integration into appointment systems and care pathways, they often hand off to humans at the hardest moments, negating promised savings.

- One click AI for everything

- Proposals that claim to drop a model into the record and solve documentation, coding, care planning, and patient messaging all at once almost always understate the time required for analysis, integration, training, and governance.

What these hype heavy ideas share is a mismatch between claimed impact and actual maturity. Either the validation evidence is weak, the workflow fit is untested, or the governance plan is thin. This is where the Informessor Hospital AI Impact Maturity Grid is most useful.

The Informessor Hospital AI Impact Maturity Grid

To make sense of competing proposals, clinicians and leaders need a shared mental model. The Informessor Hospital AI Impact Maturity Grid is a simple two by two that scores any AI project on two axes.

- Impact axis

- How much does the proposal move outcomes that matter, such as mortality, readmissions, patient experience, staff burnout, throughput, or margin?

- Maturity axis

- How strong is the evidence, how ready are the data, how well is the tool integrated into workflow, how robust is governance, and how stable is the vendor?

Imagine a square divided into four quadrants.

- Top right: High Impact, High Maturity

- Examples: well governed ambient documentation in a stable specialty, proven radiology triage tools, mature revenue cycle bots with clear returns.

- Top left: High Impact, Low Maturity

- Examples: ambitious early warning systems with limited local validation, broad virtual care triage platforms, AI guided population health programs.

- Bottom right: Low Impact, High Maturity

- Examples: small automations that save minutes per task but are rock solid, such as simple referral sorting or administrative coding suggestions.

- Bottom left: Low Impact, Low Maturity

- Examples: experimental chatbots with no integration, AI dashboards that duplicate existing reports, pilots with no clear success criteria.

The Impact Maturity Playbook

Here is a concrete way to use this grid in your next committee or design review.

- Define the outcome and user

- Start by naming a primary outcome, such as reduced overnight documentation time, shorter emergency department boarding, lower claim denial rate, or fewer sepsis related deaths. Also specify who directly uses the tool.

- Score impact from one to five

- Ask a small group of clinicians, operations leaders, and financial leaders to score potential impact if the tool works as intended. One is trivial, five is transformative. Focus on a specific use case, not the vendor marketing deck.

- Score maturity from one to five

- For maturity, have data and quality leaders review:

- Data readiness and coverage

- External evidence such as peer reviewed studies or public evaluations

- Internal pilots or shadow mode tests

- Workflow integration depth

- Governance, monitoring, and fallback plans

- Place the proposal in a quadrant

- Plot impact on the vertical axis and maturity on the horizontal. Document why you chose those scores. Use this placement to decide the investment path.

- Choose one of four actions

- Scale: High impact, high maturity projects get funding and a roll out plan.

- Guarded pilot: High impact, low maturity projects proceed in limited settings with clear success measures and exit criteria.

- Opportunistic: Low impact, high maturity projects move forward when they fit into existing upgrades or provide quick wins with minimal risk.

- Decline or defer: Low impact, low maturity proposals are paused unless they support a strategic experiment.

You can reuse this process for individual tools, whole vendor platforms, or portfolios. Over time, your roadmap should shift more projects into the high impact and high maturity quadrant while shrinking the hype zone.
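The playbook steps can be sketched in a few lines of code, which may help when scoring a long list of proposals in a spreadsheet export. This is a minimal illustration, not an official implementation of the grid. The cutoff for "high" (a score of 4 or 5) is an assumption, since the playbook does not fix one, and the example proposals and their scores are invented.

```python
from dataclasses import dataclass

# Assumption: scores of 4 or 5 count as "high". The playbook does not
# define a cutoff, so adjust this to your committee's convention.
HIGH_THRESHOLD = 4

# (high impact?, high maturity?) -> action named in the playbook
ACTIONS = {
    (True, True): "Scale",
    (True, False): "Guarded pilot",
    (False, True): "Opportunistic",
    (False, False): "Decline or defer",
}


@dataclass
class Proposal:
    name: str
    impact: int    # 1 (trivial) to 5 (transformative)
    maturity: int  # 1 to 5, from the data and quality review


def recommend(proposal: Proposal) -> str:
    """Map a scored proposal to one of the four playbook actions."""
    for label, score in (("impact", proposal.impact), ("maturity", proposal.maturity)):
        if not 1 <= score <= 5:
            raise ValueError(f"{label} score must be between 1 and 5, got {score}")
    high_impact = proposal.impact >= HIGH_THRESHOLD
    high_maturity = proposal.maturity >= HIGH_THRESHOLD
    return ACTIONS[(high_impact, high_maturity)]


# Hypothetical proposals with invented scores, for illustration only.
portfolio = [
    Proposal("Ambient documentation in primary care", impact=4, maturity=4),
    Proposal("Sepsis alert without local validation", impact=5, maturity=2),
    Proposal("Referral sorting automation", impact=2, maturity=5),
    Proposal("Standalone chatbot pilot", impact=2, maturity=1),
]

for p in portfolio:
    print(f"{p.name}: {recommend(p)}")
```

Keeping the cutoff in a single constant makes it easy to test how many projects change quadrant when the committee tightens or relaxes its definition of "high".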

Role Based Checklists And Next Steps For A Sane AI Roadmap

A realistic AI roadmap depends on the questions each group asks. Here are practical prompts clinicians, leaders, and founders can use this month.

For Clinicians

- Does this AI tool make a specific part of my day easier or safer, and can I see the workflow before go live?

- How was the model validated for patients like mine, and what are its known failure modes?

- How easy is it to correct the tool when it is wrong, and do those corrections feed back into improvement?

- What training and support will I receive, including how to handle patient questions about AI use?

For Hospital And Health System Leaders

- Where do our current AI and automation projects sit on the Impact Maturity Grid, and how does that align with our strategy?

- Do we have a clear governance structure that spans clinical, data, security, and legal teams, with explicit decision rights?

- Are we tracking outcome and equity metrics, not just adoption or satisfaction, for each AI tool?

- How concentrated is our risk in a small set of vendors or single platforms, and what are our backup plans?
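Tracking equity metrics can start simply: compute the same performance measure separately for each patient group and look for gaps. The sketch below does this for sensitivity, meaning the share of real events that triggered an alert. The group labels and records are hypothetical, and a real review would also need adequate sample sizes and confidence intervals.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Share of true events that triggered an alert, per patient group.

    records: iterable of (group, had_event, alerted) tuples.
    Groups with no true events are omitted from the result.
    """
    events = defaultdict(int)
    caught = defaultdict(int)
    for group, had_event, alerted in records:
        if had_event:
            events[group] += 1
            if alerted:
                caught[group] += 1
    return {group: caught[group] / events[group] for group in events}


# Hypothetical records, for illustration only.
records = [
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, False),
    ("group_a", True, True),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", True, True),
    ("group_b", False, True),  # false alert, ignored by sensitivity
]

for group, value in sensitivity_by_group(records).items():
    print(f"{group}: sensitivity {value:.0%}")
```

A gap like the one in this toy data would be a prompt for closer review, not proof of bias on its own.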

For Health Tech Founders And Product Teams

- Can we clearly articulate which quadrant our product belongs in today and what evidence we need to move rightward on maturity?

- Have we chosen a narrow enough use case that a busy hospital team can evaluate without needing a research grant?

- Do we offer transparent performance metrics, including where the model is less reliable, and do we support local calibration?

- Are we investing as much in integration, training, and support as in model development?

Pulling It Together

If you map current projects and upcoming proposals on the grid, a pattern usually emerges. Many organizations discover a cluster of low impact, low maturity pilots that drain attention, a few high maturity workhorses that deserve more visibility, and a small set of high ambition projects that need stronger evaluation plans.

A sane AI roadmap for the next 3 years might look like this.

- Consolidate and scale proven workhorses such as ambient documentation, radiology support, and efficient revenue cycle automation.

- Run structured pilots for promising early warning and operations tools with clear success thresholds.

- Retire or pause low impact projects and reinvest those resources into governance, evaluation, and staff training.

- Keep a small experimental budget for genuinely novel ideas, but insist on transparent alignment to the grid from day one.

By 2028, the hospitals that thrive will likely not be those that chased the most futuristic demos, but those that treated AI as a disciplined part of clinical and operational improvement.

Frequently Asked Questions

How Common Is AI Use In Hospitals In 2025

AI is now present in most large hospitals in some form, especially in radiology, ambient documentation pilots, and revenue cycle automation. Adoption is uneven by region and facility type, and many deployments remain limited to certain departments or workflows rather than whole system transformations [6][7][11][12].

Does AI Actually Reduce Physician Burnout

AI is not a cure for burnout, but certain tools help. Ambient documentation has been associated with modest reductions in time spent in the record and improved wellbeing scores in multi site studies, while broader burnout rates remain around 45 percent among physicians [1][3][4].

Are AI Early Warning Systems Proven To Save Lives

Some sepsis and deterioration tools show outcome benefits, including reduced mortality and shorter stays, in well designed studies and real world implementations [8][9][10]. However, not every model delivers these results, and performance depends heavily on workflow, calibration, and governance.

Where Should A Hospital Start With AI In 2025

Most organizations see the best starting points in areas with clear burden and strong data, such as ambient documentation, focused radiology support, and revenue cycle automation. These map to the high impact and moderate to high maturity quadrants, especially when supported by good integration and governance [1][4][6][11][12][13].

How Should We Think About AI In Low Resource Settings

AI is beginning to show impact in low resource settings as well. For example, a fetal monitoring system in Malawi using AI enabled software was associated with an 82 percent reduction in stillbirths and neonatal deaths in one clinic over 3 years [16]. These examples highlight the importance of context specific design and partnership rather than copy pasting tools from high income hospitals.

Key Takeaways

- A small set of use cases (ambient documentation, targeted imaging support, and revenue cycle automation) is delivering dependable value in 2025 when implemented with strong governance.

- Early warning systems for sepsis and deterioration can improve outcomes, but only when paired with careful calibration, alert management, and clear response pathways.

- Many ambitious ideas such as fully autonomous diagnosis and generic chatbots remain closer to hype, with weak validation and limited integration.

- The Informessor Hospital AI Impact Maturity Grid offers a simple way to compare tools and focus investment on projects that combine meaningful impact with real readiness.

- A sane AI roadmap scales proven workhorses, pilots high potential projects under tight guardrails, and deliberately retires low impact experiments.

Action Checklist For The Next Week

- List all AI and automation initiatives in your hospital and assign each to a quadrant of the Impact Maturity Grid.

- Identify at least one high impact, high maturity project that deserves more support or wider rollout, and one low impact project that could be paused.

- For any proposed new AI tool, require a one page summary that states its target outcome, user, external evidence, and proposed quadrant placement.

- Convene a short cross functional huddle to review early warning systems and ambient documentation pilots, focusing on calibration, training, and staff feedback.

- Begin drafting or updating an AI governance charter that specifies decision rights, monitoring metrics, and escalation paths for failure cases.

References

[1] UChicago Medicine. Studies suggest ambient AI saves time, reduces burnout and fosters patient connection. News article, 2025.

[2] Tierney AA et al. Ambient Artificial Intelligence Scribes to Alleviate the Burden of Documentation. NEJM Catalyst, 2024.

[3] Mass General Brigham. Ambient documentation technologies reduce physician burnout. Press release, 2025.

[4] American Medical Association. Measuring and addressing physician burnout. National survey summary, 2025.

[5] Feldheim B. AI scribes save 15000 hours and restore the human side of medicine. American Medical Association, 2025.

[6] United States Food and Drug Administration. Artificial Intelligence Enabled Medical Devices list. Regulatory resource, 2025.

[7] Joshi G et al. FDA approved AI and machine learning enabled medical devices. Electronics, 2024.

[8] Adams R et al. Prospective multi site study of patient outcomes after implementation of a machine learning based early warning system for sepsis. Nature Medicine, 2022.

[9] Edelson DP et al. Early Warning Scores With and Without Artificial Intelligence. JAMA Network Open, 2024.

[10] Bignami EG et al. Artificial Intelligence in Sepsis Management: An Overview. Frontiers in Medicine, 2025.

[11] Huang WL et al. A case study of lean digital transformation through robotic process automation in healthcare institutions. BMC Health Services Research, 2024.

[12] GeBBS Healthcare Solutions. Transforming revenue cycle management with RPA technology. Case study, 2025.

[13] Business Insider. A healthcare giant is using AI to sift through millions of transactions. It has saved employees 15000 hours a month. Report on Omega Healthcare, 2025.

[14] MDClarity. Robotic Process Automation’s Role in Revenue Cycle. Thought leadership article, 2025.

[15] Cognizant. Robotic process automation in eligibility and benefits verification for a revenue cycle provider. Case summary, 2025.

[16] The Guardian. How AI monitoring is cutting stillbirths and neonatal deaths in a clinic in Malawi. Global health report, 2024.