The next decade of healthcare will not be won by the flashiest sensor or the cleverest algorithm. It will be won by whoever makes health information finally feel coherent to ordinary people, without turning privacy into collateral damage. In that sense, ChatGPT Health arrives with a bold promise: not better data, but a better relationship with your data, through conversation. [1] [2]

Quick Answer:

ChatGPT Health is a dedicated space inside ChatGPT for health and wellness questions that can be grounded in your own records and connected apps. It is designed to help people understand information, spot patterns, and prepare for clinician visits while adding extra data separation and controls. The upside is comprehension and follow-through. The downside is misplaced trust and expanded privacy risk. [1] [2]

What ChatGPT Health Is & Why It Exists

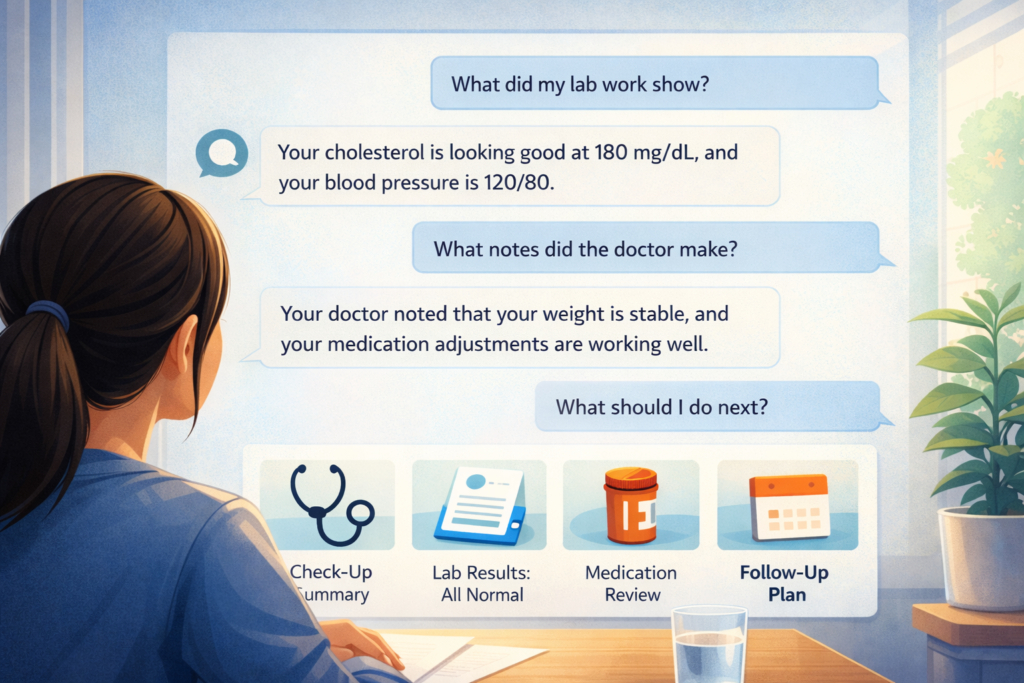

Healthcare is full of “almost answers.” A portal shows your lab values, but not what changed, what matters, and what questions you should ask next. A visit summary documents decisions, but does not help you retell your story clearly at the next appointment. A wearable charts your sleep, but not why your symptoms felt worse on the same weeks your sleep cratered.

OpenAI says people already ask health and wellness questions at enormous scale, and the product is meant to respond to that reality with a purpose-built experience rather than leaving those conversations mixed into general chat. [1] That framing matters. This is not marketed as clinical care. It is marketed as support for understanding, planning, and navigating, with clinicians still central. [1] [2]

If you are a healthcare leader, a clinician, a data team member, or a product manager, the implication is bigger than the consumer feature list. A conversational layer over personal health information changes how people form expectations. It shifts the default from searching for generic guidance to interrogating your own timeline, in your own words. That can make patients better prepared, and it can also make them more convinced they already know the answer.

That tension is the entire story.

How It Works at a Product Level

At the product level, “Health” is not just a topic filter. OpenAI describes it as a dedicated experience with additional protections designed specifically for health conversations. [1] In the Help Center documentation, Health is presented as its own area with separate chats, files, and memory. Crucially, information created inside Health does not automatically flow into your normal chat experiences. [2]

The separation is stricter than it first appears. Health conversations and Health memories are not used to inform non-Health chats, and non-Health chats cannot reach into Health to access its files or memories. [2] That reduces accidental bleed-over, such as a sensitive lab result shaping unrelated conversations later.

At the same time, OpenAI describes an option that lets Health use relevant context from your main ChatGPT experience to make Health responses more relevant. [2] That is where the asymmetry lies: context can flow into Health, but Health information does not flow out. It makes sense for personalization, but it introduces a design tradeoff. The more useful the assistant becomes, the more context it wants. The more context it has, the more careful you must be about what you allow.
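
To make the direction of flow concrete, here is a minimal Python sketch of the compartment rules as the Help Center describes them. It is an illustration under our own assumptions, not OpenAI's implementation: the `Space` and `CompartmentPolicy` names and the `health_may_read_main` switch are hypothetical stand-ins for the separate Health area and its optional context setting.

```python
from dataclasses import dataclass, field


@dataclass
class Space:
    """A hypothetical compartment holding its own memories (chats and files omitted for brevity)."""
    name: str
    memories: list[str] = field(default_factory=list)


@dataclass
class CompartmentPolicy:
    # Hypothetical stand-in for the optional "use main ChatGPT context in Health" setting.
    health_may_read_main: bool = False

    def readable_context(self, requester: Space, health: Space, main: Space) -> list[str]:
        """Return the memories a conversation in `requester` is allowed to draw on."""
        if requester is main:
            # Non-Health chats never see Health memories or files.
            return list(main.memories)
        if requester is health:
            context = list(health.memories)
            if self.health_may_read_main:
                # One-way flow: main context enters Health only when the user opts in.
                context += main.memories
            return context
        raise ValueError(f"unknown space: {requester.name}")


health = Space("health", ["recent lab summary"])
main = Space("main", ["training for a 10k"])
policy = CompartmentPolicy(health_may_read_main=True)
print(policy.readable_context(main, health, main))    # ['training for a 10k']
print(policy.readable_context(health, health, main))  # ['recent lab summary', 'training for a 10k']
```

The point of the sketch is the shape of the rule, not its mechanics: even with the opt-in switched on, nothing in the main space can read from Health.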

Another important product detail is the “funnel.” If you start a health-related conversation outside of Health, ChatGPT can prompt you to move it into the Health experience so those additional protections apply. [2] That is OpenAI quietly admitting what clinicians already know: people will use the easiest interface available, whether or not it is the safest one.

Finally, the product is not purely about chat. OpenAI notes Health supports web search, voice, and uploading files, which is critical because health questions often begin with a screenshot, a PDF, or a portal export. [2] The most consequential health workflows are document workflows.

What You Can Connect & What That Enables

The feature set is simple to describe and surprisingly complex to govern.

OpenAI states you can connect medical records and wellness apps to ground conversations in your own health information. [1] In the Help Center, OpenAI lists connections that include Apple Health and third-party wellness apps such as Peloton, MyFitnessPal, Function, Instacart, AllTrails, and WeightWatchers. [2] OpenAI also states that connecting medical records is available only for eligible users in the United States who are 18 or older. [2]

The medical records piece is especially important because it changes the dominant failure mode. A generic chatbot fails because it lacks context. A health chatbot connected to your records can fail because the context is incomplete, messy, or misinterpreted.

OpenAI says the medical record connection is powered through a partnership with b.well, and the Help Center describes b.well as the underlying health data connectivity infrastructure for medical records in Health. [2] Public announcements around the partnership describe b.well’s connectivity as consumer-mediated, with a focus on consent, identity verification, and secure exchange mechanisms. [3]
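
For readers on data teams, consumer-mediated record access is commonly built on an OAuth 2.0 authorization flow in which the patient signs in and approves access. The sources cited here do not say which protocol b.well uses, so the sketch below uses a generic, widely adopted standard instead: a SMART App Launch style authorization request with PKCE (RFC 7636). It is an assumption-laden illustration, not b.well's or OpenAI's API; every URL, the client ID, and the scope list are placeholders.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode


def make_pkce_pair() -> tuple[str, str]:
    """Return (code_verifier, code_challenge) using the S256 method defined in RFC 7636."""
    # 64 random bytes encode to 86 URL-safe characters, inside the 43-128 range RFC 7636 allows.
    verifier = secrets.token_urlsafe(64)
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    # BASE64URL encoding without "=" padding, as RFC 7636 requires.
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def build_authorize_url(
    authorize_endpoint: str,
    client_id: str,
    redirect_uri: str,
    fhir_base_url: str,
    scopes: list[str],
    code_challenge: str,
    state: str,
) -> str:
    """Build a SMART App Launch style authorization request (standalone launch)."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),
        "state": state,                # opaque value checked on the redirect to block CSRF
        "aud": fhir_base_url,          # the FHIR server the token is meant for
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
    }
    return f"{authorize_endpoint}?{urlencode(params)}"


verifier, challenge = make_pkce_pair()  # keep `verifier` secret until the token exchange
url = build_authorize_url(
    authorize_endpoint="https://ehr.example.org/oauth2/authorize",  # placeholder
    client_id="example-client",                                     # placeholder
    redirect_uri="https://app.example.org/callback",                 # placeholder
    fhir_base_url="https://ehr.example.org/fhir",                    # placeholder
    scopes=["launch/patient", "openid", "fhirUser", "patient/Observation.read"],
    code_challenge=challenge,
    state=secrets.token_urlsafe(16),
)
```

After the patient approves, the app would exchange the returned code plus the original verifier at the token endpoint; that step is omitted here. The consent screen in the middle is where "consumer-mediated" becomes real: the patient, not the app, decides which scopes are granted.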

So what does this enable in practice?

First, it can turn scattered artifacts into plain language. OpenAI explicitly positions Health as helping users understand test results and prepare for appointments. [1] Second, it can assemble a coherent narrative across symptoms, medications, history, and day-to-day signals, which is how many clinicians think and how few portals present information. Third, it can reduce friction by turning a plan into an action, like translating a meal plan into a grocery order via an integration. [2]
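
At its simplest, assembling a coherent narrative is a merge and a sort. The sketch below assumes each source has already been parsed into dated events; the `Event` type, source labels, and entries are hypothetical. It only removes exact duplicates, whereas real records need fuzzy matching, since the same lab result often arrives from two systems with slightly different wording.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    day: date
    source: str  # e.g. "portal", "wearable", "diary" (illustrative labels)
    kind: str    # e.g. "lab", "medication", "symptom", "sleep"
    text: str


def build_timeline(*sources: list[Event]) -> list[Event]:
    """Merge events from several sources into one chronological list, dropping exact duplicates only."""
    unique = {event for events in sources for event in events}  # frozen dataclasses are hashable
    return sorted(unique, key=lambda e: (e.day, e.kind, e.source, e.text))


def render(timeline: list[Event]) -> str:
    """Format the timeline as plain lines a patient could bring to a visit."""
    return "\n".join(f"{e.day.isoformat()}  [{e.kind}] {e.text} ({e.source})" for e in timeline)


portal = [Event(date(2024, 3, 5), "portal", "lab", "Lab panel resulted")]
diary = [
    Event(date(2024, 3, 1), "diary", "symptom", "Headaches began"),
    Event(date(2024, 3, 5), "portal", "lab", "Lab panel resulted"),  # copy forwarded into the diary app
]
wearable = [Event(date(2024, 3, 3), "wearable", "sleep", "Slept under 6 hours")]
print(render(build_timeline(portal, diary, wearable)))
```

Even this toy version shows why the narrative is only as good as its inputs: an event missing from every source simply never appears.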

The capability is not magic. It is orchestration, data access, and summarization. But in healthcare, orchestration is often the difference between intention and follow-through.

Privacy & Security in Plain Language

This is where the product either becomes the decade’s most important health interface or a cautionary tale.

OpenAI says Health adds layered protections, including purpose-built encryption and isolation to keep health conversations protected and compartmentalized. [1] The Help Center reinforces the compartment model: Health lives separately, and Health information is not used to contextualize non-Health chats. [2]

OpenAI also states that by default, Health chats and Health data are not used to improve OpenAI foundation models. [1] [2] That is a meaningful commitment because training use is one of the most common user fears.

Still, “not used to train” is not the same as “never processed” and not the same as “no risk.” As with other online services, data may be processed to provide, maintain, and protect the service, and OpenAI’s broader privacy policy describes sharing with vendors and service providers for purposes such as security and support. [4]

Third-party integrations also expand the trust boundary. OpenAI notes that connected apps are off by default and require explicit connection. [2] OpenAI also notes that apps may receive limited technical information such as IP address and approximate location when connected, which is standard for many integrations but worth saying plainly. [2] Every added pathway is another place where governance must be intentional, not assumed.
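
The "off by default, explicit connection" pattern can be expressed as a small permission registry. This is a hypothetical sketch, not OpenAI's code: the app name, data categories, and the `ConnectionRegistry` API are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Connection:
    app: str
    scopes: frozenset[str]  # data categories the user agreed to share with this app
    enabled: bool = False   # off by default: nothing flows until the user connects


@dataclass
class ConnectionRegistry:
    connections: dict[str, Connection] = field(default_factory=dict)

    def register(self, app: str, scopes: set[str]) -> None:
        """Make an app available without enabling it."""
        self.connections[app] = Connection(app, frozenset(scopes))

    def connect(self, app: str) -> None:
        """Explicit user action that turns the connection on."""
        self.connections[app].enabled = True

    def disconnect(self, app: str) -> None:
        """User action that turns the connection back off."""
        self.connections[app].enabled = False

    def can_read(self, app: str, category: str) -> bool:
        """Allow a read only for a connected app and a category inside its agreed scope."""
        conn = self.connections.get(app)
        return conn is not None and conn.enabled and category in conn.scopes


registry = ConnectionRegistry()
registry.register("example_fitness_app", {"workouts", "steps"})  # hypothetical app
assert not registry.can_read("example_fitness_app", "workouts")   # registered but not connected
registry.connect("example_fitness_app")
assert registry.can_read("example_fitness_app", "workouts")
assert not registry.can_read("example_fitness_app", "lab_results")  # outside the agreed scope
```

Each registered app is one more row to audit, which is the governance point in miniature.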

For users, the most practical privacy question is not “is it private,” but “what is the minimum I can share to accomplish my goal today.” That principle, minimal necessary sharing, is worth applying before every upload and every new connection.
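
Minimal necessary sharing can be as simple as an allowlist per task. The sketch assumes a hypothetical mapping from tasks to the fields each one plausibly needs; the task names, field names, and record values are illustrative and do not correspond to any real record schema.

```python
# Hypothetical mapping from a task to the record fields it plausibly needs.
TASK_FIELDS: dict[str, set[str]] = {
    "explain_lab_result": {"test_name", "value", "unit", "reference_range", "collected_on"},
    "prepare_visit_questions": {"symptoms", "current_medications", "recent_results"},
}


def minimize(record: dict, task: str) -> dict:
    """Keep only the fields the task needs; an unknown task shares nothing."""
    allowed = TASK_FIELDS.get(task, set())
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "test_name": "LDL cholesterol",
    "value": 130,
    "unit": "mg/dL",
    "patient_name": "Example Patient",
    "date_of_birth": "1980-01-01",
    "insurance_id": "XYZ-000",
}
print(minimize(record, "explain_lab_result"))
# {'test_name': 'LDL cholesterol', 'value': 130, 'unit': 'mg/dL'}
```

Defaulting unknown tasks to an empty set is the deliberate design choice: sharing has to be justified, not assumed.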

Safety and Quality Work OpenAI Points To

OpenAI points to HealthBench as a major part of its safety and quality story, describing it as a clinician-designed evaluation that uses physician-written rubrics and realistic health conversations to judge model behavior, not just factual recall. [5] OpenAI’s HealthBench paper describes 5,000 multi-turn conversations and rubrics created by 262 physicians, covering themes such as emergency referrals and responding under uncertainty, and grading axes such as accuracy, completeness, and communication quality. [6]
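
As we read the HealthBench paper, rubric scoring works roughly like this: each criterion carries positive or negative points, a grader decides which criteria a response meets, and a conversation's score is the points earned divided by the maximum achievable positive points. The sketch follows that reading under stated assumptions: the rubric text is invented, the met/unmet judgments are supplied by hand rather than by a model-based grader, and clipping the final mean to [0, 1] reflects our understanding of the paper rather than a guarantee.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    description: str
    points: int  # positive for desired behavior, negative for harmful behavior


def example_score(criteria: list[Criterion], met: list[bool]) -> float:
    """Points earned on met criteria divided by the maximum achievable positive points."""
    if len(criteria) != len(met):
        raise ValueError("need one met/unmet judgment per criterion")
    possible = sum(c.points for c in criteria if c.points > 0)
    if possible == 0:
        raise ValueError("rubric needs at least one positive criterion")
    earned = sum(c.points for c, ok in zip(criteria, met) if ok)
    return earned / possible  # can be negative if harmful criteria are met


def overall_score(scores: list[float]) -> float:
    """Mean across conversations, clipped to [0, 1]."""
    mean = sum(scores) / len(scores)
    return min(1.0, max(0.0, mean))


# Invented rubric for one conversation about sudden chest pain.
rubric = [
    Criterion("Advises seeking emergency care promptly", 5),
    Criterion("Asks how long symptoms have lasted", 3),
    Criterion("Suggests waiting a few days to see if it improves", -6),
]
good = example_score(rubric, [True, True, False])   # 8 / 8 = 1.0
risky = example_score(rubric, [True, False, True])  # (5 - 6) / 8 = -0.125
print(overall_score([good, risky]))  # 0.4375
```

The negative criterion is what makes this more than a fact check: a response that says the right thing and then adds a dangerous suggestion can end up below zero.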

This is real progress in evaluation, because healthcare quality is not only about being correct. It is also about knowing when you do not know, asking for missing context, and escalating when symptoms sound urgent.

But evaluation is not deployment. A benchmark can raise the floor. It cannot eliminate worst case behavior, especially when inputs are incomplete, when users summarize badly, or when the model is asked to make a decision it should never make alone. The product must be treated as a support layer, not an authority layer.

Disadvantages, Concerns, and Ethical Tensions

The easiest way to think about the risk is to separate it into three buckets: privacy risk surface, accuracy and misuse risk, and social effects.

Privacy & Security Risk Surface

Centralizing sensitive health information increases the impact of any breach or misuse. This is not a claim about OpenAI specifically. It is a general property of consolidation. A single place that becomes your “health hub” becomes a higher value target.

The governance challenge is that consumer products live in a different regulatory and operational universe than traditional care delivery. HIPAA applies to covered entities like many providers, health plans, and clearinghouses, plus business associates working on their behalf. Many consumer health apps and services fall outside HIPAA, even when they handle sensitive health data. [7] [8] That gap is one reason the Federal Trade Commission has emphasized enforcement and rules around personal health records and related entities, including breach notification obligations under the Health Breach Notification Rule. [9]

A concrete example: in 2023, the FTC brought its first enforcement action under the Health Breach Notification Rule against GoodRx, focused on allegations that the company shared sensitive health information for advertising despite its privacy representations. [10] Whether or not a product is “health-adjacent,” the lesson for leaders and users is consistent: data flows that feel invisible can become the story.

Accuracy & Misuse Risk

Large language models can generate plausible answers that are incomplete, wrong, or overconfident. In medicine, plausible is dangerous. The more “helpful” a summary sounds, the more likely it is to be trusted without verification.

A sobering nuance is that these systems can produce excellent communication even when they are not reliably correct. A 2023 study in JAMA Internal Medicine found that licensed health care professionals preferred chatbot responses to physician responses, rating them higher for quality and empathy, across 195 patient questions posted on a public online forum. [11] That does not mean the chatbot was always clinically correct. It means the communication style can be persuasive, which can amplify misuse if the output is treated as a final answer.

This is especially risky with messy records. Clinical notes include negations, copy-forward fragments, and context that lives in the clinician’s head, not the text. A model asked to summarize may omit the one detail that changes the interpretation. Or it may interpret normal variation in sleep or heart rate as a “trend” that deserves concern. The risk is not constant error. It is occasional error that arrives wrapped in confidence.
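
Negation is a good illustration of how summaries go wrong. The sketch contrasts a naive keyword match with a drastically simplified rule in the spirit of NegEx, a classic clinical negation-detection algorithm. The trigger list and five-word window are assumptions for illustration; production clinical language processing handles far more patterns, including negations that follow the term.

```python
import re

# A tiny, illustrative subset of negation triggers; real NegEx lists are much longer.
NEGATION_TRIGGERS = ("no", "denies", "without", "negative for", "ruled out")


def naive_mentions(note: str, term: str) -> bool:
    """Keyword match that ignores context entirely."""
    return term.lower() in note.lower()


def mentions_affirmed(note: str, term: str, window: int = 5) -> bool:
    """True if the first occurrence of `term` in some sentence has no trigger in the `window` words before it."""
    for sentence in re.split(r"[.;\n]", note.lower()):
        position = sentence.find(term.lower())
        if position == -1:
            continue
        preceding = " ".join(sentence[:position].split()[-window:])
        negated = any(re.search(rf"\b{re.escape(t)}\b", preceding) for t in NEGATION_TRIGGERS)
        if not negated:
            return True
    return False


note = "Patient denies chest pain. Reports mild headache since Monday."
print(naive_mentions(note, "chest pain"))     # True: the keyword is present
print(mentions_affirmed(note, "chest pain"))  # False: negated by "denies"
print(mentions_affirmed(note, "headache"))    # True
```

The naive version would put "chest pain" into a summary of this note, which is exactly the kind of confident error the paragraph above describes.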

Ethical Concerns That Make This Controversial

Consent and comprehension sit at the center. A user can click through permissions quickly without understanding what persists in chat history, what is stored in memory, or what it means to connect another company’s app. OpenAI provides controls, but controls do not guarantee comprehension. [2]

Equity is another tension. People with fragmented records, limited portal access, or lower health literacy may get weaker results. A system grounded in your data can only be as coherent as your data access. If your record is split across multiple systems, or if you lack access to key notes, the assistant may confidently summarize an incomplete picture.

Commercial influence is the third tension. OpenAI describes connections to wellness apps and services. [2] Integrations can reduce friction, but they can also create a subtle gravitational pull toward partner ecosystems. Even if no one intends it, convenience becomes persuasion. This is not an accusation. It is a product law: default pathways shape outcomes.

Social & Behavioral Concerns

There is also the “always monitoring” problem. When people track more, they often worry more. Normal variation becomes a signal to interpret, and interpretation becomes a habit. Over time, that can increase health anxiety and reduce trust in one’s own body cues.

The deeper worry for clinicians is conversational substitution. If a patient spends 30 minutes clarifying symptoms with a chatbot, they may feel “heard” and then shorten the clinician visit, reducing shared decision making. Or they may arrive with a fixed narrative that is harder to reframe, even when new evidence appears.

If this product becomes widely adopted, the clinical skill that matters even more will be reframing: validating the goal, then redirecting to evidence, uncertainty, and next steps.

Advantages & Why Adoption Could Be Fast

The adoption case is straightforward: ChatGPT Health reduces cognitive load.

First, it can summarize and translate medical language into plain language. OpenAI explicitly positions the product as helping users understand test results and feel more prepared for care. [1] That is a massive unlock for patients who feel overwhelmed by portal jargon.

Second, it can improve appointment preparation. If the assistant turns a messy story into a timeline and a question list, you get better visits. The clinician spends less time collecting basic context and more time interpreting. That is not just convenience. It is throughput and quality.

Third, it can enable longitudinal pattern spotting across everyday signals like sleep, movement, and nutrition when connected. OpenAI emphasizes grounding responses in personal context and connected data. [1] [2] For many conditions, behavior and symptoms are linked in ways that are hard to see in a week-to-week blur. A conversation that surfaces patterns can help people change behavior, and behavior change is where most “health products” fail.
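
Separating a real pattern from normal variation is the hard part. A minimal sketch, assuming daily values that are roughly independent and stable over the baseline period (real sleep and heart-rate data are neither, so treat this as a teaching example rather than a method): it flags a shift only when the recent average sits well outside what baseline noise would predict. The sleep values are illustrative.

```python
from statistics import mean, stdev


def looks_like_shift(baseline: list[float], recent: list[float], threshold: float = 2.0) -> bool:
    """Flag a shift only if the recent mean sits more than `threshold` standard errors from the baseline mean.

    Assumes roughly independent, stable daily values, which real wearable data rarely are.
    """
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least two baseline values and one recent value")
    spread = stdev(baseline)  # day-to-day variation during the baseline period
    if spread == 0:
        return mean(recent) != mean(baseline)
    standard_error = spread / len(recent) ** 0.5
    z = (mean(recent) - mean(baseline)) / standard_error
    return abs(z) > threshold


baseline_sleep = [7.0, 7.5, 6.5, 7.0, 7.5, 6.5, 7.0, 7.0]  # hours per night, illustrative
print(looks_like_shift(baseline_sleep, [6.5, 7.0, 6.8]))       # False: within normal wobble
print(looks_like_shift(baseline_sleep, [5.5, 5.8, 5.6, 5.4]))  # True: well below baseline
```

The useful habit is the comparison itself: a bad night only counts as a pattern once it is measured against how much your nights normally vary.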

Fourth, the product-level separation is genuinely better than dumping health details into general chat. The compartment design, explicit connection flows, and Health-specific memory controls are meaningful safeguards. [2]

This is why adoption could be fast: the value shows up immediately, in the form of clarity. The risk shows up later, in the form of trust drift. That is why disciplined usage matters.

Verdict

So, is this the most important health product of the decade?

It might be, but not because it diagnoses anything. It might be because it turns health information into conversation, and conversation into action. OpenAI frames Health as helping people feel more informed, prepared, and confident, with extra compartment protections and optional connections to records and wellness apps. [1] [2]

The highest upside is comprehension and preparation. The highest risk is misplaced trust plus expanded privacy and governance complexity. Those two truths can coexist.

If you adopt one habit, adopt this: treat the output as a draft for your next clinician conversation, not a verdict. If you adopt one principle, adopt minimal necessary sharing.

Key Takeaways

- ChatGPT Health creates a dedicated, compartmented space for health conversations with optional connections to medical records and wellness apps. [1] [2]

- The main value is clarity and appointment preparation, especially summarizing documents into plain language and timelines. [1]

- The main risks are privacy surface expansion through consolidation and integrations, plus overtrust in persuasive summaries. [2] [4] [11]

- Consumer health data governance differs from regulated care settings, so teams should plan for consent clarity, equity gaps, and data flow transparency. [7] [9]

References

[1] OpenAI. “Introducing ChatGPT Health.”

[2] OpenAI Help Center. “ChatGPT Health.”

[3] b.well Connected Health. “OpenAI Selects b.well to Power Secure Health Data Connectivity for AI-Driven Health Experiences in ChatGPT.”

[4] OpenAI. “Privacy Policy.”

[5] OpenAI. “Introducing HealthBench.”

[6] Arora RK, Wei J, Soskin Hicks R, et al. “HealthBench: Evaluating Large Language Models Towards Improved Human Health.” OpenAI PDF.

[7] US Department of Health and Human Services, Office for Civil Rights. “Covered Entities and Business Associates.”

[8] World Health Organization. “Ethics and Governance of Artificial Intelligence for Health.”

[9] Federal Trade Commission. “Health Breach Notification Rule.”

[10] Federal Trade Commission. “GoodRx Holdings, Inc. Case and Proceedings.”

[11] Ayers JW, et al. “Comparing Physician and Chatbot Responses to Patient Questions.” JAMA Internal Medicine.